BACHELOR PROJECT

Affiliation: The Royal Danish Academy of Fine Arts

Engine: Unreal Engine 5

Platform: PC

Team size: 1

Role: Everything

Tools: Blueprint, Quixel, Eye tracking

Exam grade: 12/A

This was my final project for my bachelor's degree. I worked on it for about four months.

The project focused on how to avoid hand-holding in video games, specifically through level design and game design. It showed how to better facilitate player autonomy by removing elements that hold the player's hand and adding elements that strengthen the player's sense of autonomy.

I also published a paper based on this project, called "Let the players go!".

Abstract:

In the field of psychology, autonomy is considered a basic human need for feeling satisfied with one's life. With this in mind, it is not surprising that games, which give players opportunities to act out imaginative scenarios, can be great tools for facilitating autonomous experiences. Developers use many tools that support player autonomy, as well as some that can stand in the way.

This project explores and tests the potential of simulation as a means to support autonomous player experiences, and how it can be used to avoid over-explaining every piece of information to the player, which can result in less autonomous experiences. The first part of the paper accounts for the fundamental theory of autonomy, the dangers of hand-holding, and what simulation can achieve regarding autonomy. The second part looks at two state-of-the-art games, each informing what I will and will not do in developing the project. The third part accounts for the choices made in producing the current version of the game slice, and the last part tests this game with the intention of evaluating its autonomous experience. Overall, the findings suggest that using simulation to facilitate autonomous experiences has potential, even if the current version contains weaknesses.

Problem statement

How can I design a video game where the player experiences a high degree of autonomy over their problem-solving by simulating a realistic and consistent inner logic?

Sub-questions

1. How will the game communicate information to the player through simulated elements, while balancing that information so the player feels they discover problems and their solutions on their own?

2. Since I cannot simulate the real world one-to-one, which elements are most important to simulate?

3. How do I use the chosen elements from question 2 to answer question 1?

Theory

![IMG20220914133528[7819]_edited.png](https://static.wixstatic.com/media/6ee4a5_ccb93df99ddd4e77a49d488d3a0e5606~mv2.png/v1/fill/w_672,h_378,al_c,q_85,usm_0.66_1.00_0.01,enc_avif,quality_auto/IMG20220914133528%5B7819%5D_edited.png)

Here I had a moment characterized by an intense feeling of having many possibilities open to me, even though none of them were directly laid out in front of me. As a game designer, I naturally imagined what a game would look like where you could interact with the world as you expect, and thus set your own rules and goals (or at least feel like you do).

Autonomy

This feeling of having opportunities that fit your own personal values is called autonomy.

According to the book Glued to Games by Scott Rigby and Richard M. Ryan, autonomous experiences in games have two criteria:

Meaningful choices. The player has opportunities that are meaningful to them.

Volitional engagement. The opportunities are ones the player actually wants to act on.

The second criterion is especially important and is the cornerstone of the project.

This is where simulation comes in, as I argue that it facilitates volitional engagement.

Simulation

In games, the player needs motivation, and one way to provide it is to reward them and support their thought process.

Part of this is that interactables should react the way the player expects them to, so that their use can be identified without the game explicitly explaining it. This is where simulation is important.

Hand-holding

In the context of this project, I defined the term hand-holding as:

the game explicitly telling the player what to do and how to do it, and over-communicating things the player could figure out themselves.

One major tool for communicating information that I wanted to avoid was the UI. Many current games have quest logs and markers that remove the need for players to figure things out for themselves. In real life there are no quest markers, yet we navigate it effectively anyway.

Simulation negates hand-holding

Simulation is a way to avoid hand-holding, as there are many realistic tools for implicitly communicating both navigational cues and how the player's direct interactions affect the world around them. This is based on the notion that the player already has relevant knowledge from the real world.

Let me give some examples:

We know that if we throw a rock, it will fall. We also know it can break a window. The game only needs to support this expectation. This is simulating logic in interactions.

Likewise, we know that we can navigate by looking for street names and house numbers, or even natural cues such as the position of the sun or the fact that sand is usually found near water. All of this is simulating navigational logic.
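The rock-and-window example of interaction logic can be sketched as a tiny, engine-agnostic simulation. The project itself was built with Blueprint in Unreal Engine 5, so the Python below is only an illustration under assumptions: the `Rock` and `Window` types, the gravity constant and the `BREAK_SPEED` threshold are all hypothetical. It shows the idea in the paragraph: the rock falls because gravity is applied every step, and the window breaks only when the impact is hard enough.

```python
from dataclasses import dataclass

GRAVITY = -9.81    # m/s^2; assumption: constant downward acceleration
BREAK_SPEED = 5.0  # m/s; hypothetical minimum impact speed that breaks glass


@dataclass
class Rock:
    height: float   # metres above the ground
    vx: float       # horizontal velocity (m/s)
    vy: float       # vertical velocity (m/s), positive is up
    x: float = 0.0  # horizontal position (m)


@dataclass
class Window:
    x: float        # horizontal position of the window pane (m)
    bottom: float   # height of the lower edge (m)
    top: float      # height of the upper edge (m)
    broken: bool = False


def simulate_throw(rock: Rock, window: Window, dt: float = 0.01,
                   max_steps: int = 10_000) -> str:
    """Step a thrown rock until it reaches the window pane or the ground."""
    for _ in range(max_steps):
        prev_x = rock.x
        # Semi-implicit Euler: update velocity first, then position.
        rock.vy += GRAVITY * dt
        rock.x += rock.vx * dt
        rock.height += rock.vy * dt
        if rock.height <= 0.0:
            return "hit ground"
        # Only count a hit on the step where the rock crosses the pane.
        if prev_x < window.x <= rock.x and window.bottom <= rock.height <= window.top:
            speed = (rock.vx ** 2 + rock.vy ** 2) ** 0.5
            if speed >= BREAK_SPEED:
                window.broken = True  # the expected outcome: the glass breaks
                return "broke window"
            return "bounced off window"  # too slow to break the glass
    return "no impact"
```

A fixed threshold on impact speed is one simple way to keep the outcome consistent: the same throw always gives the same result, so players can learn the rule by experimenting instead of being told it.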

Affordance

One real-world tool I used a lot to implicitly direct the player and communicate goals was affordance. An affordance is a possibility for action that an observer can perceive just by looking at an object. For example, a chair "asks" the observer to sit on it.

Negative support

If the player expects something to react a certain way and it doesn't, the illusion that the world reflects their intuition is broken. The player's willingness to accept this illusion is called suspension of disbelief, and the players' experience of it was going to be an indicator of whether the game was successful at facilitating an autonomous experience.

Development

Choosing mechanics

The criterion for choosing which elements should be simulated and implemented was:

Whether their absence would interfere negatively with the player's suspension of disbelief.

Because the game doesn't explicitly tell the player what to do, and the player therefore has to find out for themselves, they needed to trust that their interactions would behave as simulated, that is, have the expected result. Therefore this illusion had to be maintained.

I made a list of elements whose absence I thought would interfere with my own suspension of disbelief, knowing I would playtest and iterate later.

Underneath you can see the self-testing level, with many of the simulated elements.

Level Design

Once I had a set of mechanics and dynamics, I started iterating on a flowchart on a Miro board.

The layout on the Miro board changed drastically and was only followed loosely, because building it in UE5 raised new problems that had not been foreseen while making the flowchart.

The player experience I wanted was:

The player will feel like they are exploring not only the environment but also opportunities, using logic to deduce which elements can be used for their goals.

Considerations for the level design:

- Affordances chosen for each interactive element, e.g. a contrasting handle on a drawer
- Variety in the use of mechanics, so the player doesn't feel they are doing the same thing over and over
- Some mechanics used sparingly, to create a sense of exploring something special
- More than one way into most of the houses
- Leads (clues) should lead to other leads or objects, but not explicitly say how
- Visibility of interactive elements
- A tutorial introduction to the axe

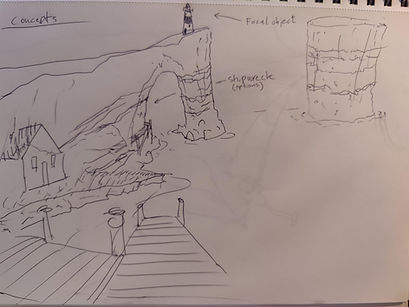

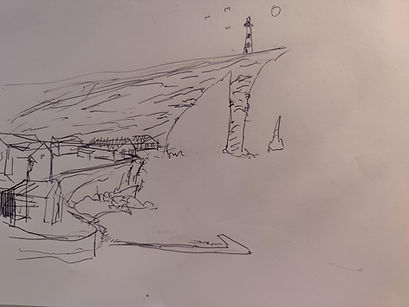

Iterations - from sketches to greybox

Considerations for the environment design:

- Non-interactable / static objects should not have any affordances
- The environment should direct the player implicitly
- The triangle-shaped cliff points towards the end goal and suggests danger through shape language
- The lighthouse is a landmark used for orientation in real life, and thus also in the game
- Composition that directs the player's navigation towards points of interest
- Each house has a distinct identity, so it's easy to navigate because you know where you are
- The ocean and the cliff act as realistic boundaries of the playable area
- Placement of the player start, the first house and the sections to pace the player's natural progression through the semi-open world

Playtest

The process was iterative, and I worked very closely with playtesters, almost collaborating with them on making the experience more autonomous.

Evaluating the game was divided into five phases:

- Self-testing
- Eye tracking
- First playable playtest
- Iteration playtest
- Final playtest

Self-testing

As I programmed the mechanics, I tested their responsiveness, feedback and affordance to make them feel intuitive to use.

Eye tracking

Since eye tracking is a large field, I will only briefly explain the purpose and results rather than going into every detail.

The first eye-tracking session gave me insight into which elements stood out among others, giving me ideas for implicitly directing the player with highlights, contrast, light, color and, most importantly, affordances.

The second session tested an important hypothesis:

If players are explicitly told that their role is to act as a detective, they will be more likely to interact with the elements that are interactable.

In it, testers had to click on elements they expected to be interactable. I divided the 8 testers into two groups: the first group knew it was a detective game, while the second group did not.

The findings were very promising. For example, group 1 clicked on chairs while group 2 did not. I interpret this as them not expecting a chair to be a path to advancing the detective mystery.
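A comparison like the chair example comes down to the share of testers in each group who clicked a given object. The Python sketch below computes that share per object and group from click logs; the function name, the data layout and the tester IDs and objects in the usage example are hypothetical and not taken from the project.

```python
from collections import defaultdict


def click_rates(clicks: dict[str, set[str]],
                groups: dict[str, str]) -> dict[str, dict[str, float]]:
    """Share of testers in each group who clicked each object.

    clicks: tester id -> set of object names that tester clicked
    groups: tester id -> group label (every tester, even those who clicked nothing)
    Returns: object name -> {group label -> fraction of that group's testers}
    """
    # Count group sizes from the group assignment, not from the click log,
    # so testers who clicked nothing still count in the denominator.
    group_sizes: dict[str, int] = defaultdict(int)
    for group in groups.values():
        group_sizes[group] += 1

    # counts[object][group] = number of testers in that group who clicked it
    counts: dict[str, dict[str, int]] = defaultdict(lambda: defaultdict(int))
    for tester, objects in clicks.items():
        for obj in objects:
            counts[obj][groups[tester]] += 1

    return {
        obj: {g: counts[obj][g] / size for g, size in group_sizes.items()}
        for obj in counts
    }


# Hypothetical usage: two testers per group, made-up clicks.
example = click_rates(
    {"t1": {"drawer", "chair"}, "t2": {"drawer"}, "t3": {"drawer"}, "t4": set()},
    {"t1": "told", "t2": "told", "t3": "not told", "t4": "not told"},
)
```

With only four testers per group, each tester moves a rate by 25 percentage points, so such numbers are best read as indications rather than measurements.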

Playtesting first playable

When the game reached a playable state, I needed to evaluate what I had made, and thereby the theories behind my design choices. After all, it is human interaction that makes games games.

I used these methods for the playtesting:

- Playtesters thinking out loud
- Playtesting with confidants and strangers
- A PXI-based questionnaire with a Likert scale
- Specific questions related to each play session

Underneath you can see the most important results for immersion and autonomy:

Iterating with playtesters

This was the most important phase. Here the only aim was to test with as many players as possible and improve anything that broke their suspension of disbelief. For example, it took only one tester who wanted to break boards by throwing rocks at them for me to implement it.

Things I added:

- Breaking boards with rocks
- Jumping
- Running

I also removed or changed anything that was not supposed to be interactable but that players thought was.

Final playtest

Naturally, at the end I wanted to know how the new version compared to the first, so I ran a similar test using the same methods as the first.

Underneath are the results of the questionnaire:

Comparing the two tests shows that I had greatly improved the feeling of autonomy and immersion, and that players learned the controls more quickly.
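A comparison between the first and final playtest can be computed by averaging each questionnaire construct per test and taking the difference. The Python sketch below assumes that each tester's construct score is the mean of their Likert items for that construct; the function names and data layout are illustrative assumptions, and the actual scale range and item-to-construct mapping should follow the PXI instrument itself.

```python
from statistics import mean


def construct_means(responses: list[dict[str, list[int]]]) -> dict[str, float]:
    """Average Likert score per construct across all testers in one test.

    responses: one dict per tester, mapping construct name -> that tester's item scores.
    Each tester's construct score is the mean of their items; the construct
    mean is then taken over testers.
    """
    per_construct: dict[str, list[float]] = {}
    for tester in responses:
        for construct, items in tester.items():
            per_construct.setdefault(construct, []).append(mean(items))
    return {c: mean(scores) for c, scores in per_construct.items()}


def compare_tests(first: list[dict[str, list[int]]],
                  final: list[dict[str, list[int]]]) -> dict[str, float]:
    """Change in mean score per construct (final minus first), for shared constructs."""
    before, after = construct_means(first), construct_means(final)
    return {c: after[c] - before[c] for c in before if c in after}
```

Averaging per tester before averaging over testers keeps a tester who answered more items for a construct from weighing more than the others.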